“Don’t put words in my mouth,” we often say. But apparently this mischief is something we enjoy. Just take a look at the 800 million total video views on Bad Lip Reading’s YouTube channel. If you’re unfamiliar, BLR takes existing video and masterfully dubs new audio that matches the lip movements, often to hilarious effect. As perfectly synced as those videos are, though, they’re still lacking in one crucial way: the dubbed audio isn’t in the subject’s actual voice. But what if it were?

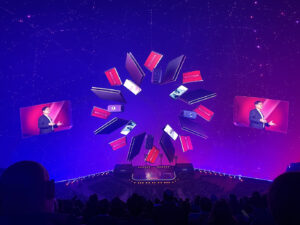

During the Sneaks portion of Adobe’s MAX 2016 conference, presenter Zeyu Jin unveiled an experimental project – titled VoCo – that could take BLR to the next level. “You guys have been making weird stuff online with photo editing,” he said to a chorus of laughter. “Let’s do the next thing today: let’s do something to human speech.” In the brief, five-minute demo that followed, Jin did just that, using the new software to edit the phrase “I kissed my dogs and my wife” (voiced by actor and comedian Keegan-Michael Key) to instead say, “I kissed Jordan three times” (referring to fellow comedian and event co-host Jordan Peele).

This kind of manipulation isn’t new, of course. Technically, audio waveform editors have made it possible since at least 2000. With Audacity, for example, anyone can take a snippet of someone saying one thing and make it sound like they said another by carefully cutting words out of the original audio and rearranging them. No matter how careful you are, though, the result will sound choppy, because real human speech constantly shifts in pitch, tempo, and tone. And even with unlimited patience, it’s virtually impossible to create natural-sounding new words this way. VoCo appears to eliminate all of these obstacles: supposedly, it needs only 20 minutes of sample audio before it can automatically generate whatever authentic-sounding words and phrases you type, via text-to-speech.

Imagine being able to correct a flubbed word in a podcast without redoing the whole segment. Not everyone is excited by the prospect, however. In an interview with the BBC, University of Stirling media and technologies lecturer Dr. Eddy Borges Rey expressed his discomfort with VoCo. “It seems that Adobe’s programmers were swept along with the excitement of creating something as innovative as a voice manipulator,” he said, “and ignored the ethical dilemmas brought up by its potential misuse.” Just think of the countless fake photos created with Photoshop since it hit the market.

Around the same time that VoCo was revealed, a group of researchers from the University of Erlangen-Nuremberg, the Max Planck Institute for Informatics, and Stanford University published a paper called “Face2Face: Real-time Face Capture and Reenactment of RGB Videos.” The paper details software that can manipulate the facial expressions and mouth movements of a subject in a YouTube video in real time. While the result occasionally looks a bit rubbery (dipping ever so slightly into the uncanny valley), it’s good enough that, according to the researchers, it could be immediately applied to “on-the-fly dubbing” of videos with translated audio.

With fake news becoming a real issue, Dr. Rey’s apprehension is certainly understandable. In news reporting, audio and video have long required too much time and skill for the average person to edit convincingly, so they could usually be taken as genuine (provided they came from a reputable source, of course). With VoCo, and now Face2Face, the “potential misuse” Dr. Rey worried about isn’t unfounded. But could a fabricated piece of media made with one, the other, or a combination of the two actually fool us?

According to Catalin Grigoras, director of the National Center for Media Forensics, the answer is no. “Those topics sound hot for the public, but they are very simple for forensics,” he wrote in an email. Beyond Face2Face’s obvious visual flaws, he noted, “There are already tools [and] methods to identify Face2Face artifacts based on the facial/mouth digital image processing imperfections.”

Grigoras said something similar about VoCo. While it could be useful for film and television (just like Face2Face), he explained that “We already have the solution to detect the artifacts based on audio authentication. VoCo can cheat on the voice but not (yet) on the mathematics of the audio signal.” In other words, while VoCo-generated audio may fool the human ear, forensic analysis can still tell it apart from genuine recordings.

So, even if VoCo and Face2Face eventually become commercially available, it seems unlikely that some ne’er-do-well could use them to pass off a forgery under forensic scrutiny, though not for lack of trying. “Any type of intentional attempts at forgery will likely leave trace artifacts of forensic value,” said Jose Ramirez, a forensic audio and video analyst at the Houston Forensic Science Center. Just as ballistics experts can use the striations on a bullet to link it to a specific gun, digital forensics technicians can use the unique traces left in audio and video files to connect them with the devices that recorded them.

Regarding fake videos, Ramirez added that even if “these spoofed recordings…fool human perception, forensic analysis may help the analyst determine the authenticity of the recorded content.”

One last thing to remember is that both VoCo and Face2Face are just demos at this point. VoCo’s presentation, in particular, could be viewed as a publicity stunt for Adobe. As one commenter pointed out on Y Combinator’s Hacker News forum, “If Adobe wanted to…convince anyone this was a real product that exists and is capable of synthesizing speech on the fly, then they’d toss a beach ball around the audience and have them shout out words to type.” It’s a fair point. The demo seems to work, but does the software work well enough to be used for good or evil? Will it ever be used at all?

While real-time facial manipulation is impressive, Face2Face is likely many years away from being anywhere close to photorealistic. Consider that even with several months of work and millions of dollars, the digitally resurrected Peter Cushing in Rogue One still left Grand Moff Tarkin looking a little off. VoCo faces fewer limitations, but it might not reach its full potential either: Adobe has hinted that it may never be released at all. Nevertheless, the existence of these programs, however prototypical, presents plenty of exciting, and troubling, possibilities.

Images courtesy of Adobe.