On the popular television show Star Trek: Voyager, the health of the crew of the U.S.S. Voyager was constantly in jeopardy. As on all starships, a trained doctor was on board to care for the crew’s well-being; however, Voyager’s resident physician was neither a human nor an alien, but a computer hologram. This holographic doctor could not only diagnose an injury or illness, but also treat ailments and perform surgical procedures to save a crew member’s life or transform him or her into a member of another alien race.

While we may not yet have Star Trek’s doctor to operate on us, technology is allowing surgery to advance beyond just a set of stainless steel tools and a talented physician. Skilled surgeons are still essential, but new surgical tools are allowing them to take their talents even further. For patients, this could mean better outcomes with a faster and less painful recovery. Here’s a look at some notable technologies you may see the next time you go “under the knife.”

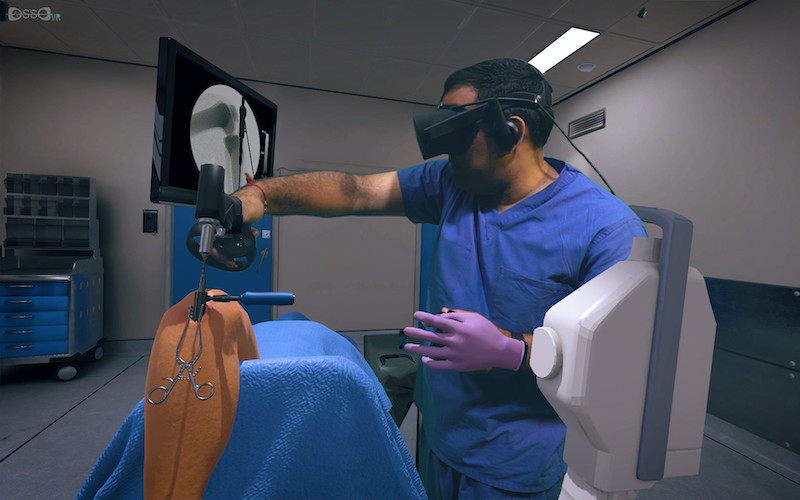

#1 VR in the OR

Before a surgeon even sets foot in an operating room, technology helps train them on the various incisions and maneuvers they’ll need to perform.

California-based Osso VR is using the immersive experiences that virtual reality (VR) can create to train surgeons on orthopedic surgical procedures. With the Osso VR program, which is hardware-agnostic, users wear a VR headset to look and move freely around a simulated operating theater and use handheld controllers to manipulate virtual tools and devices in the right sequence and “operate” on a patient with accurate and precise movements. The experience is not a video game, though; the program grades your performance based on time, accuracy, and other metrics on which surgeons are typically evaluated. The company recently added multi-user support, allowing users to train as a team or participate as a coach or mentor.
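
To make the idea of metric-based grading concrete, here is a minimal Python sketch of how time, sequence, and precision could be folded into one score. It is purely illustrative: Osso VR’s actual scoring method isn’t described here, and every name, weight, and benchmark below (`Attempt`, `grade`, `target_seconds`, `tolerance_mm`) is a hypothetical assumption.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    """One simulated procedure. All fields are hypothetical metrics."""
    seconds_taken: float    # time the trainee needed
    target_seconds: float   # assumed benchmark time for the procedure
    steps_correct: int      # steps performed in the right sequence
    steps_total: int        # steps in the full procedure
    mean_error_mm: float    # average deviation from the planned tool path
    tolerance_mm: float     # assumed acceptable deviation


def clamp01(value: float) -> float:
    """Keep a partial score inside the range 0..1."""
    return max(0.0, min(1.0, value))


def grade(attempt: Attempt, weights=(0.3, 0.4, 0.3)) -> float:
    """Return a 0-100 score from time, sequence, and precision.

    The weights are illustrative assumptions, not published values.
    """
    if attempt.seconds_taken <= 0 or attempt.steps_total <= 0 or attempt.tolerance_mm <= 0:
        raise ValueError("time, step count, and tolerance must be positive")

    # Finishing at or under the benchmark time earns full marks for speed.
    time_score = clamp01(attempt.target_seconds / attempt.seconds_taken)
    # Fraction of steps done in the correct order.
    sequence_score = attempt.steps_correct / attempt.steps_total
    # Precision falls to zero at twice the tolerated deviation.
    precision_score = clamp01(1.0 - attempt.mean_error_mm / (2 * attempt.tolerance_mm))

    w_time, w_sequence, w_precision = weights
    total = w_time * time_score + w_sequence * sequence_score + w_precision * precision_score
    return round(100 * total, 1)


if __name__ == "__main__":
    slow_and_shaky = Attempt(1200, 600, 5, 10, 2.0, 2.0)
    print(grade(slow_and_shaky))
```

Keeping each metric as a separate partial score means a coach or mentor could review where a trainee lost points rather than seeing only the final number.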

In addition to providing higher-quality, interactive training, VR can also save on costs. “The cost to live train a surgeon can be anywhere from $50,000 to $400,000,” says Osso VR’s CEO, Dr. Justin Barad. “Our technology cuts those costs and increases the training’s value beyond a one-time impact. It limits the need to ship expensive equipment and book flights, while removing the need for a surgeon to take time away from patients.”

With VR-based medical training, more doctors and surgeons can be better educated and prepared, and they can train as often as they need on their own time before working on real patients. Surgical procedures could be safer, and patients may feel more at ease knowing their doctor is competent and well trained.

#2 Getting a Better View

X-rays were once the gold standard for non-invasively viewing the insides of a patient. While they’re still popular today, other imaging modalities, such as ultrasound, magnetic resonance imaging (MRI), and computed tomography (CT), allow doctors to see anatomical features that are hidden in x-rays.

These modalities have revolutionized medicine: CT gives dimension to flat x-rays to help diagnose traumatic injury and monitor the progress of some forms of cancer, and MRI is commonly used for sports and movement-related injuries and can even show active parts of the brain.

In the case of oncology, these modalities can reveal much about the structure of a potential tumor. However, tumors can be deceptive, often looking and feeling very similar to the healthy tissue around them.

One feature that differentiates healthy tissue from cancerous imposters is the oxygen content present in the tissue, and a new method called optoacoustic imaging can help reveal this. Optoacoustic imaging involves directing bursts of laser light at a potential tumor. Each burst causes the tissue to heat up and expand slightly, causing a small mechanical wave to propagate through the surrounding tissue. These waves can be detected using an ultrasound transducer and converted to high-resolution images with green or red highlights indicating the oxygen concentration to help the oncologist determine what is cancerous.
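
As a rough illustration of the oxygen measurement behind those highlights, the sketch below computes oxygen saturation, the fraction of hemoglobin that is carrying oxygen, for each pixel of an image. It assumes the scanner has already estimated relative amounts of oxygenated and deoxygenated hemoglobin per pixel; the function names and input grids are hypothetical and do not represent any vendor’s actual processing.

```python
from typing import List, Optional


def oxygen_saturation(oxy_hb: float, deoxy_hb: float) -> Optional[float]:
    """Fraction of hemoglobin carrying oxygen (0..1).

    Returns None when there is no hemoglobin signal to measure.
    """
    total = oxy_hb + deoxy_hb
    if total <= 0:
        return None
    return oxy_hb / total


def saturation_map(
    oxy: List[List[float]], deoxy: List[List[float]]
) -> List[List[Optional[float]]]:
    """Apply oxygen_saturation pixel by pixel to two same-sized grids."""
    return [
        [oxygen_saturation(o, d) for o, d in zip(oxy_row, deoxy_row)]
        for oxy_row, deoxy_row in zip(oxy, deoxy)
    ]


if __name__ == "__main__":
    # Hypothetical 2x2 estimates of oxygenated and deoxygenated hemoglobin.
    oxy = [[3.0, 1.0], [0.0, 2.0]]
    deoxy = [[1.0, 1.0], [0.0, 6.0]]
    for row in saturation_map(oxy, deoxy):
        print(row)
```

A display layer could then color each pixel according to its saturation value; which color marks which range is a presentation choice left out of this sketch.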

Although all of these imaging modalities have their limits on what they can scan accurately, they can be combined or supplemented with other types of clinical scans to fill in the gaps, opening up a whole new realm of clinical applications. Managing and interpreting such a wide range of imaging data requires a flexible, cloud-based infrastructure. Platforms like Studycast make it easier for clinicians to store, access, and share multimodality studies, from X-rays and MRIs to newer imaging techniques, within a single, secure environment.

Take, for example, Texas-based Seno Medical, which is developing a technology that combines the optoacoustic imaging described above with conventional ultrasound imaging. The resulting high-resolution image will be able to show the location, shape, and oxygen content of breast cancer tumors to help provide a clearer diagnosis and more effective treatment plan.

Another technology, developed at Purdue University, combines functional MRI, which creates images of the brain, with electroencephalography (EEG), a commonly used method of recording brain signals. The result is a powerful method of monitoring electrical brain signals while visualizing the parts of the brain that are actively triggering them. Such technology could give new insights into the progression and treatment of neurological diseases and traumatic brain injuries.

While most doctors view medical images on a regular computer monitor, advancements in computer-generated graphics, holography, and augmented/mixed reality are allowing them to view, manipulate, and even virtually fly through three-dimensional scans of a body part.

One company, California-based EchoPixel, uses a patient’s CT, MRI, or ultrasound scans to generate a 3D holographic image. The resulting hologram can be viewed on a special monitor with a pair of 3D glasses and manipulated with a stylus to better familiarize a surgeon with a patient’s unique anatomy for better surgery planning.

Several companies are forgoing the monitor altogether by integrating 3D images into Microsoft’s popular HoloLens headset. The augmented reality headset overlays virtual objects and images onto the wearer’s view of the real world. In the operating room, HoloLens is being tested in many roles, from displaying a patient’s live vitals to identifying blood and bones underneath the skin, and even designing the layout of operating rooms themselves.

#3 Smart Scalpels

Scalpels and surgical knives are the workhorses of every operating room toolkit. Though ultra-sharp and effective at cutting and slicing, they’re not so great at differentiating between an extraneous bit of human tissue to be excised and a critical body part keeping the patient alive. That distinction is vital, especially in cancer surgery, where the goal of the operation is to remove as much of the cancer from the body as possible while leaving healthy tissue intact. Several companies have developed smart surgical tools that can rapidly analyze the composition of the tissue they touch to determine whether it is cancerous or healthy.

The iKnife, developed at Imperial College London, consists of an electrosurgical knife connected to a device called a mass spectrometer. As the iKnife’s electrified blade cuts through tissue, it gives off smoke that is channeled into the mass spectrometer, which analyzes the chemicals in the smoke to determine whether the tissue is malignant.

Mexican researcher David Oliva Uribe has developed a handheld tool that, although it doesn’t cut, can warn the surgeon in less than half a second with visual or auditory notifications about the kind of tissue being touched. His “smart scalpel” accomplishes this by measuring the extremely small electrical currents that the scalpel’s tip generates when it makes contact with various kinds of tissue, a phenomenon known as the piezoelectric effect.


#4 Robot, M.D.

In our Summer 2018 issue, we mentioned the “da Vinci,” a surgical robot from California-based Intuitive Surgical that acts as replacement hands under the control of a human surgeon at a nearby terminal. But while the da Vinci is currently the best-known robotic surgery system, it isn’t the only bionic player in the field.

Over the past several years, many companies have developed robotic surgery systems with high-tech features that make robotic surgery feel a little more natural to the human surgeon.

North Carolina-based TransEnterix has developed the “Senhance” system, which is currently being used to repair hernias, remove gallbladders, and treat various other urologic and gynecologic conditions. Though it operates similarly to the da Vinci, the Senhance system features haptic feedback in the controls to let the surgeon “feel” when he or she comes in contact with other anatomical structures. Additionally, the instrument controls are more ergonomic and better resemble traditional surgical instruments, which could help the surgeon transfer skills developed during conventional surgery to the robotic system. The Senhance system also features an eye-controlled camera that produces 3D images to further enhance the surgeon’s point of view.

Tech behemoth Google is also dipping its toe into the robotic surgery space. It has partnered with medical giant Johnson & Johnson to start Verb Surgical, a company that will use robotic technology to pioneer what it terms “Surgery 4.0.” Very little is known yet about Verb’s surgical robot, but according to the company, it will make use of the latest in robotics, instrumentation, imagery, and connectivity technology. Google-powered big data and machine learning are also expected to play a major role in helping surgeons make better, more informed decisions in the middle of an operation.

The future of surgery will involve a close partnership between human doctors and medical innovation. While robots and medical technology can’t replace a human surgeon, they can help their human counterparts recognize their limitations and can augment their skills. With the help of technology, surgeons can make better clinical decisions that can lead to a safer, more successful operation.