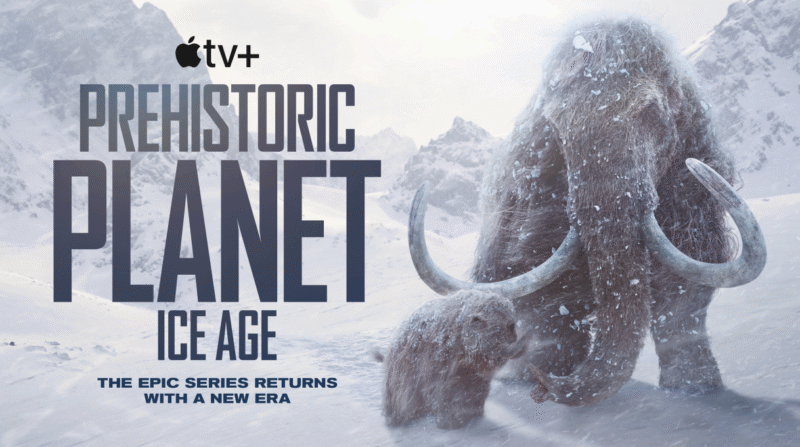

When Prehistoric Planet returned with Ice Age, audiences anticipated bigger creatures, harsher environments, and even more seamless natural-history realism. What truly defied expectations, and makes the new season such a technical milestone, is that nearly every shot sits at the cutting edge of current visual effects technology.

The five-part docuseries on Apple TV+ takes viewers on a journey back in time through millions of years. For Framestore VFX Supervisor Russell Dodgson and Showrunner/Executive Producer Matthew Thompson, the assignment was to imagine extinct megafauna while transporting audiences into the Pleistocene with a 1,000mm lens.

That meant replicating every nuance of the experience, starting with snow sticking to fur properly. It meant mammoths carrying real weight in each step. It meant breath vapor moving with the right viscosity in -40° air. Most importantly, it meant giving cinematographers something physical to respond to: a puppet in the Arctic wilderness.

What emerged is one of the most technologically sophisticated natural-history productions ever attempted, powered by upgraded simulation tools, new fur-interaction systems, cutting-edge previsualization (previs), and the timeless analog craft of puppetry.

Rethinking How Fur Behaves, How Snow Lives

Dodgson and Thompson didn’t work on the first two seasons, but they arrived with a clear sense of how dramatically the VFX toolset had evolved.

“Four or five years ago, we would’ve put more limitations on what was possible,” Dodgson said. “But every part of the pipeline—rigging workflows, fat-and-muscle systems, simulation tools—has gone through a full new generation.”

Fur was the big hurdle. Traditional fur simulation relies on “guide hairs,” where only a small percentage of hairs are actively simulated and the rest follow along. It breaks spectacularly when two furry creatures interact, fight, or roll together, which happens constantly in Ice Age.
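To make the guide-hair idea concrete, here is a minimal sketch in Python. It is not Framestore's system: the function names, the inverse-distance weighting, and the two-guide setup are all assumptions chosen for illustration. Only the guide hairs carry simulated motion, and each follower hair simply blends the motion of the guides nearest to it.

```python
import math

def nearest_guides(root, guide_roots, k=2):
    """Return (index, weight) pairs for the k guide hairs closest to a root."""
    dists = sorted((math.dist(root, g), i) for i, g in enumerate(guide_roots))[:k]
    # Inverse-distance weights; the small epsilon avoids division by zero.
    raw = [(i, 1.0 / (d + 1e-9)) for d, i in dists]
    total = sum(w for _, w in raw)
    return [(i, w / total) for i, w in raw]

def follow_guides(root, guide_roots, guide_offsets, k=2):
    """Place a follower hair tip by blending the offsets of nearby guides."""
    tip = list(root)
    for i, w in nearest_guides(root, guide_roots, k):
        for axis in range(3):
            tip[axis] += w * guide_offsets[i][axis]
    return tuple(tip)

# Hypothetical data: two simulated guides (roots, plus tip offsets after a step).
guide_roots = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
guide_offsets = [(0.0, 1.0, 0.0), (0.5, 1.0, 0.0)]

# A follower rooted halfway between the guides inherits their average motion.
follower_tip = follow_guides((0.5, 0.0, 0.0), guide_roots, guide_offsets)
```

The follower never tests for collisions itself, so anything that touches it between the guides goes unnoticed. That is one plausible reading of why the approach struggles once two coats of fur press into each other.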

Framestore had to redevelop its entire fur-simulation system, increasing density and inventing new ways for fur to collide and respond naturally. Houdini became a critical partner, especially for the way fur interacted with snow. As the team quickly learned, snow is not one-size-fits-all.

“At -40, snow behaves like crystals,” Dodgson explained. “But wet snow clumps. Deep snow deforms differently than surface snow. We had to educate ourselves on all of it.”

This realism extended to physics. As in natural-history filmmaking, every animation was created in real time but reviewed in slow motion, which forced the team to retime simulations without losing the tactile weight that sells a creature’s presence.
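As a rough illustration of what retiming involves, the sketch below resamples a per-frame value at a slower playback speed using linear interpolation. The `retime` function and the sample data are hypothetical. A production pipeline would more likely re-run or sub-step the simulation than interpolate a cache, which is part of why preserving a sense of weight is difficult.

```python
def retime(samples, speed):
    """Resample per-frame values so playback runs at `speed` times real time.

    speed=0.5 gives half-speed slow motion (about twice as many frames).
    Values that fall between source frames are linearly interpolated.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    last = len(samples) - 1
    out = []
    n = 0
    while n * speed <= last:
        t = n * speed                    # position in source frames
        i = int(t)                       # source frame at or before t
        frac = t - i                     # how far we are toward the next frame
        nxt = samples[min(i + 1, last)]  # clamp at the final frame
        out.append(samples[i] * (1.0 - frac) + nxt * frac)
        n += 1
    return out

# Hypothetical cache: the height of a foot over three real-time frames.
heights = [0.0, 1.0, 4.0]
slow = retime(heights, 0.5)
```

Linear blending smooths out the acceleration between frames, so a heavy footfall can start to look floaty in slow motion. That loss is the kind of thing the team had to guard against.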

Puppetry, the Practical Secret Weapon

For all the cutting-edge tech, one of Ice Age’s most important innovations stayed old-school: practical puppetry. Executive Producer Jon Favreau reportedly understood entire sequences emotionally just from watching the puppet edit.

That’s how strong the reference was. The puppets were not meant for final animation but for something Dodgson calls “technical reference puppetry,” a way to shape camera behavior and shot rhythm with real, physical cues.

“You’ve got this very digital, highly technical field on one side and traditional natural-history filmmaking on the other,” he said. “The puppets are the timeless thing bridging the two.”

The team trekked into remote environments like Svalbard, lugging puppet cases across kilometers of frozen terrain, all so that cinematographers could film “creature stand-ins” the way they would shoot real wildlife. Long lenses, imperfect handheld motion, heat haze, and focus micro-adjustments all stayed in the footage, with human instinct baked into every frame.

The puppets informed lighting, blocking, emotional rhythm, and even editorial choices. In some cases, the puppet pass was compelling enough that it was partially used in the final shot after paint-outs and camera-tracking magic.

Previs, Unreal, and a Flexible Digital-Analog Loop

Every frame of Ice Age was prevised using a combination of Unreal Engine and the new Maya viewport renderer. But unlike in many VFX-heavy productions, previs was never treated as locked gospel.

“It’s your last best idea,” Dodgson said. “Then you get to Patagonia and the light is better on the next hill over, so you change it. The goal is to keep leveling up the shot.”

This flexible digital-analog interplay became a core creative engine of the show. The team would plan with tech, respond with instinct, and constantly override one with the other when the moment demanded.

No AI, But Plenty of Machine Learning

For readers wondering how much of a role generative AI played in a production like this, the short answer is that it didn’t.

“We didn’t use AI for any output,” Dodgson said. “And honestly, there is no dataset for an AI to start from until we create one. Nobody has footage of a mammoth behaving realistically.”

Machine learning does exist under the hood, assisting rigging and muscle systems, but every final pixel is artist-driven.

Building Animals That Can Withstand Scrutiny

One of the hardest parts of the show is the same thing that makes natural history so hypnotic: the camera lingers. No quick edits. No hiding.

“There’s not one shot without massive creatures,” Dodgson said. “You’re hanging on them for 10–15 seconds, which means people can marvel. They can look for problems.”

Framestore’s new FAT system (Framestore Anatomy Tool) was essential here, giving riggers and animators a far more accurate simulation of how fat, skin, muscle, and fur move in unison. Even the deformation of eyelids based on corneal thickness was painstakingly modeled because in long shots, the audience can feel those differences.

Nowadays, audiences are becoming more visually fluent. Not necessarily in VFX, but in animals.

“We’re surrounded by mammals,” Thompson said. “People intuitively know how they move. That’s the real bar.”

The Future of Natural-History VFX

Prehistoric Planet: Ice Age may be one of the best showcases yet for what happens when digital precision and analog intuition meet halfway. It’s a production that demanded new tools, new workflows, and new problem-solving every day. It also holds on to tradition, with real objects in real space informing real human decisions.

Projects like this are a reminder that technological firepower can only enhance the frontier of visual effects, while bringing a puppet into the Arctic ensures that each pixel that follows has a soul.